How AI is Changing Marketing Attribution

Deep dive into new capabilities, new visualizations and new insights (with screenshots!)

Have you ever spent hours (or days) reconstructing the micro-level details of the marketing, sales, and partner activity behind a big win to show marketing’s real impact at a board meeting?

AI is fundamentally changing what’s possible: pulling signals from calls, emails, documents, chats, and CRM data, and analyzing momentum across all of it, to understand what actually moves deals forward.

For years, marketers did the best they could with incomplete data and rigid measurement frameworks. First-touch, last-touch, and multi-touch attribution weren’t wrong so much as necessary shortcuts: compressed versions of reality in a world where most meaningful signals, and complete data, simply weren’t accessible.

That’s what’s changed. AI is set to massively improve attribution by reconstructing the full story of a deal and letting patterns of influence emerge across people, time, and activity, instead of relying on proxy weighting.
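To make the idea of "compressed versions of reality" concrete, here is a minimal sketch of the classic rule-based models applied to one hypothetical journey. The channel names and deal value are invented for illustration, and linear weighting stands in for multi-touch in general (position-based or time-decay weighting are common alternatives).

```python
from collections import defaultdict


def first_touch(touches: list[str], value: float) -> dict[str, float]:
    """All credit goes to the earliest recorded touch."""
    return {touches[0]: value}


def last_touch(touches: list[str], value: float) -> dict[str, float]:
    """All credit goes to the final recorded touch before close."""
    return {touches[-1]: value}


def linear_multi_touch(touches: list[str], value: float) -> dict[str, float]:
    """Credit is split evenly across every recorded touch."""
    credit: dict[str, float] = defaultdict(float)
    share = value / len(touches)
    for channel in touches:
        credit[channel] += share
    return dict(credit)


# Hypothetical journey for a $300,000 deal, in chronological order.
journey = ["paid_search", "webinar", "partner_referral", "webinar", "demo_request"]

print(first_touch(journey, 300_000))  # {'paid_search': 300000}
print(last_touch(journey, 300_000))   # {'demo_request': 300000}
print(linear_multi_touch(journey, 300_000))
# {'paid_search': 60000.0, 'webinar': 120000.0, 'partner_referral': 60000.0, 'demo_request': 60000.0}
```

Each model hands out the full deal value, but to very different places, and none of them can credit a touch that was never logged in the first place.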

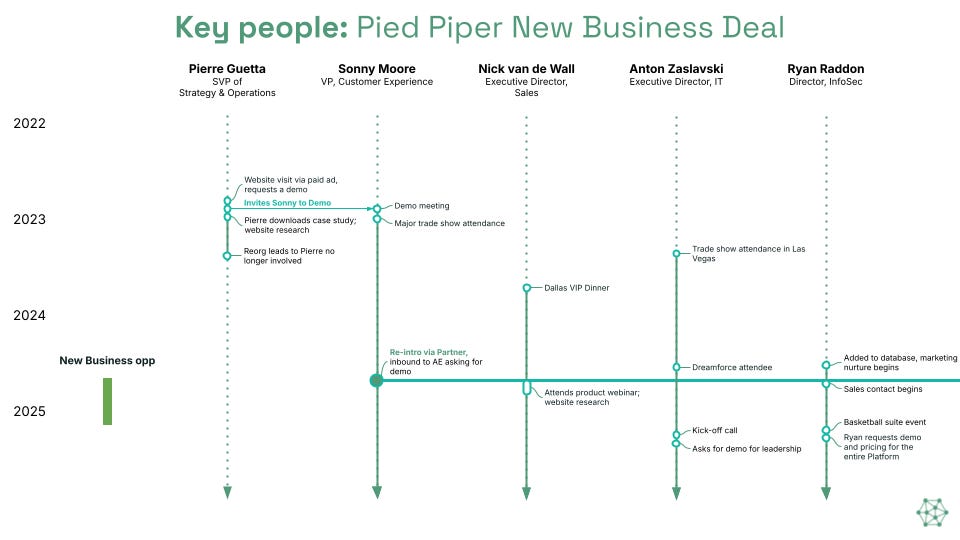

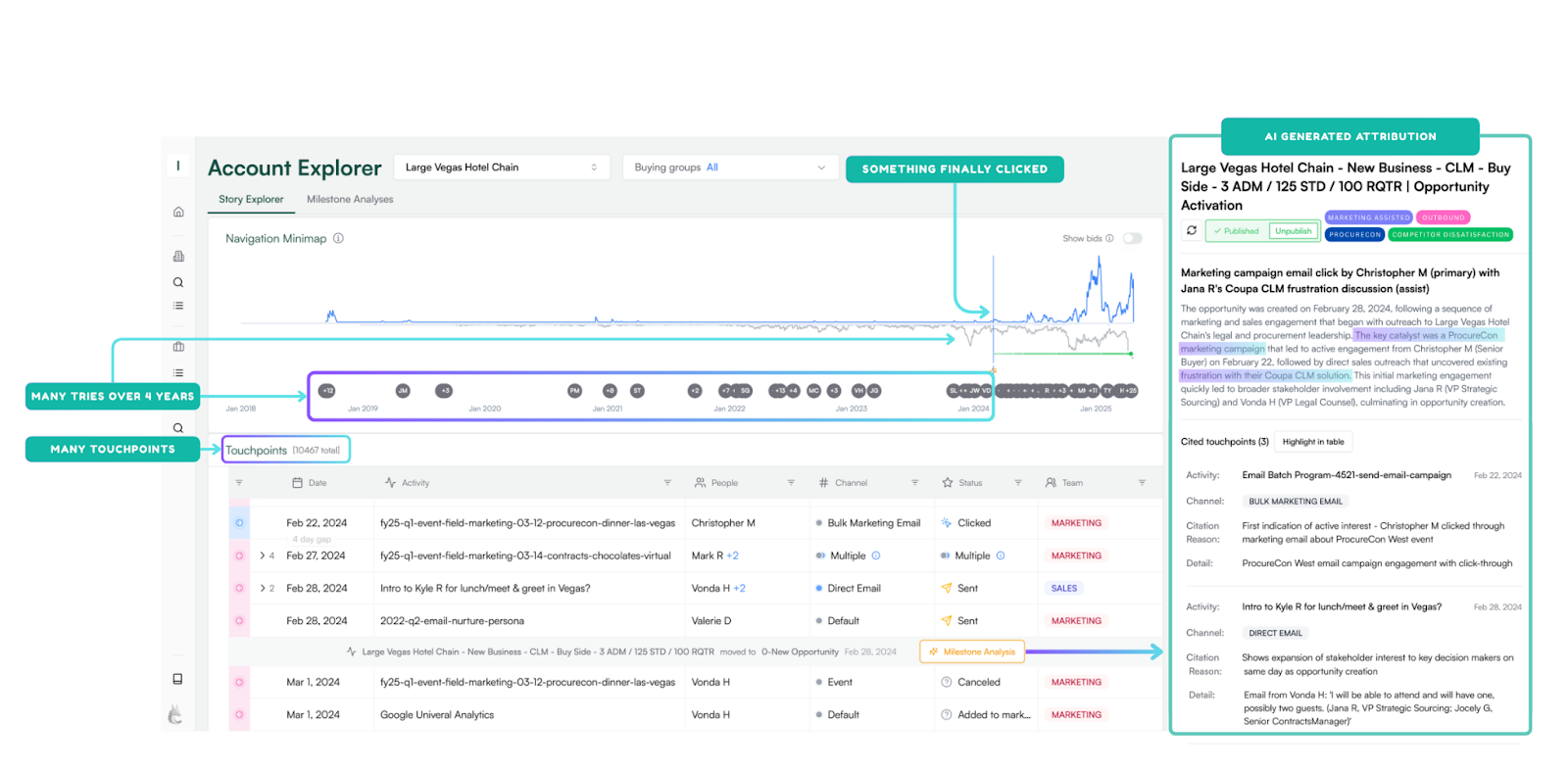

I’ve been closely watching Mada Seghete and Alex Bauer, co-founders of Upside, over the past year. What first caught my attention wasn’t just the breadth of signals they were pulling together but how they visualized them: an automated version of the board slides I used to build by hand, with many touches and many buyers over long periods of time, finally telling a complete story.

After my “Brand vs. Demand is a Measurement Crisis” post showed that many marketers want to invest more in brand but feel constrained by attribution limitations, I asked Mada and Alex if they would share how they’re approaching marketing attribution in an AI-first world. Below, she covers the following, with screenshots that will have you drooling :)

Why Traditional Models Fail

What’s Actually Changed with AI

1. Unstructured context is now capturable

2. Analysis that was too expensive is now feasible

3. We moved from data scarcity to data abundance

A Different Approach: Questions Instead of Models

The Prerequisite: Data Quality

What This Looks Like in Practice

How This Applies at Different Stages of Growth

I. The Attribution Problem Everyone Knows

Marketers hate attribution because it has been fundamentally inaccurate. It drives endless debates and turf wars over credit. It over-credits what’s easy to measure: late-stage clicks, form fills, “sourced” fields, and under-credits what’s hard to track: brand awareness, events, partner influence, the dark funnel, internal forwarding chains.

The problem isn’t effort; it’s access. Marketers can measure only a fraction of the signals shaping deals, so attribution models are often necessary shortcuts rather than sources of truth.

Plus, the data they DO have is bad: Adverity’s 2025 research found that nearly half (45%) of the data marketers use to make decisions is incomplete, inaccurate, or out of date. And 43% of CMOs believe less than half of their marketing data can be trusted.

II. Why Traditional Models Fail

Deals are too complex for any single model.

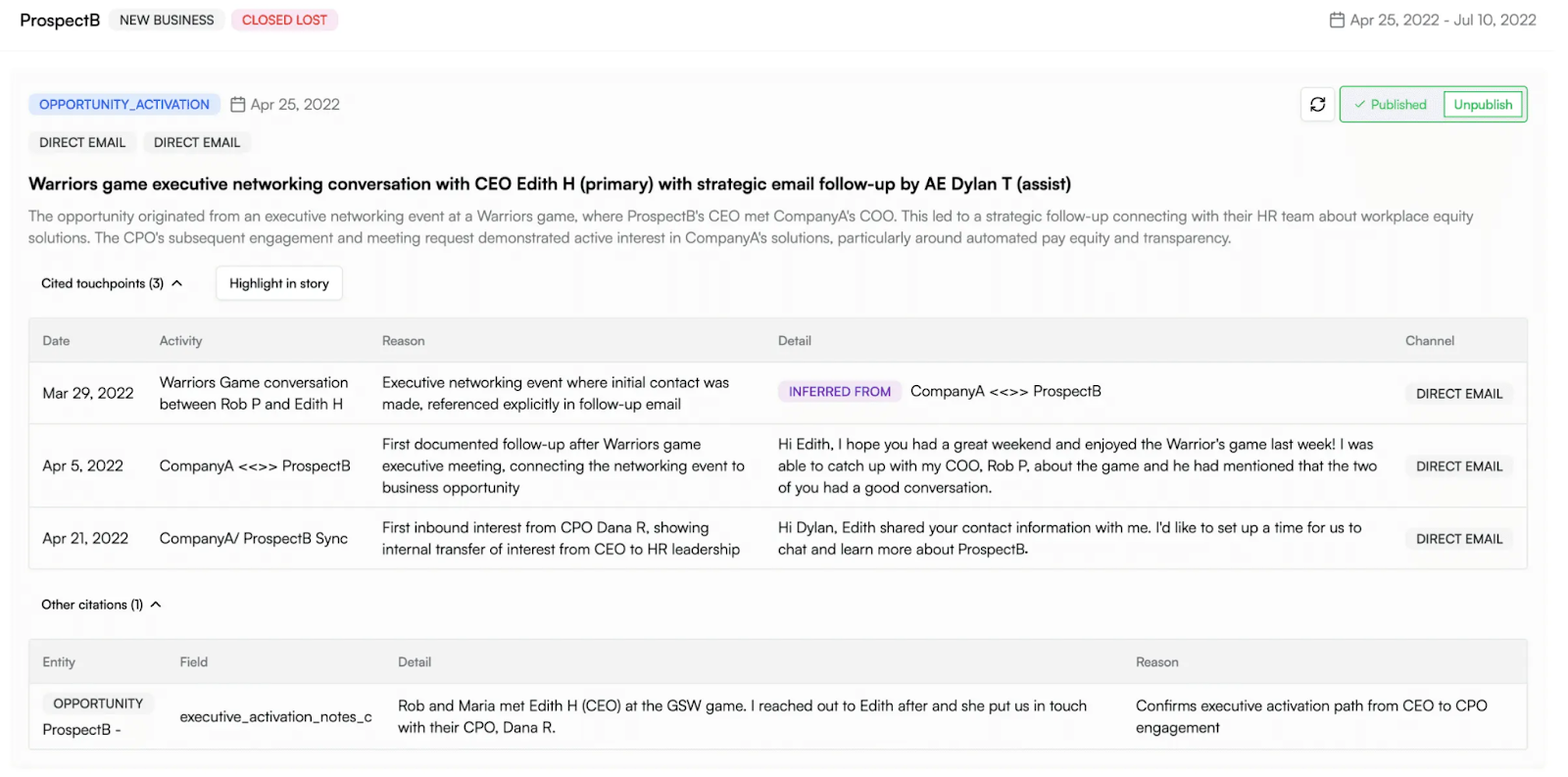

My experience: I spent a full day analyzing 10,000 touchpoints for one customer’s $3M deal. The activation moment? A BD partner convinced an executive to take a look at the tool. The exec forwarded a note to a manager, who forwarded it to the client team. This was in addition to hundreds of marketing and sales interactions that built awareness.

The insight: No rule-based system would catch this. The internal team didn’t even know it happened. And no human would invest this time for every deal.

Even sophisticated multi-touch approaches struggle because:

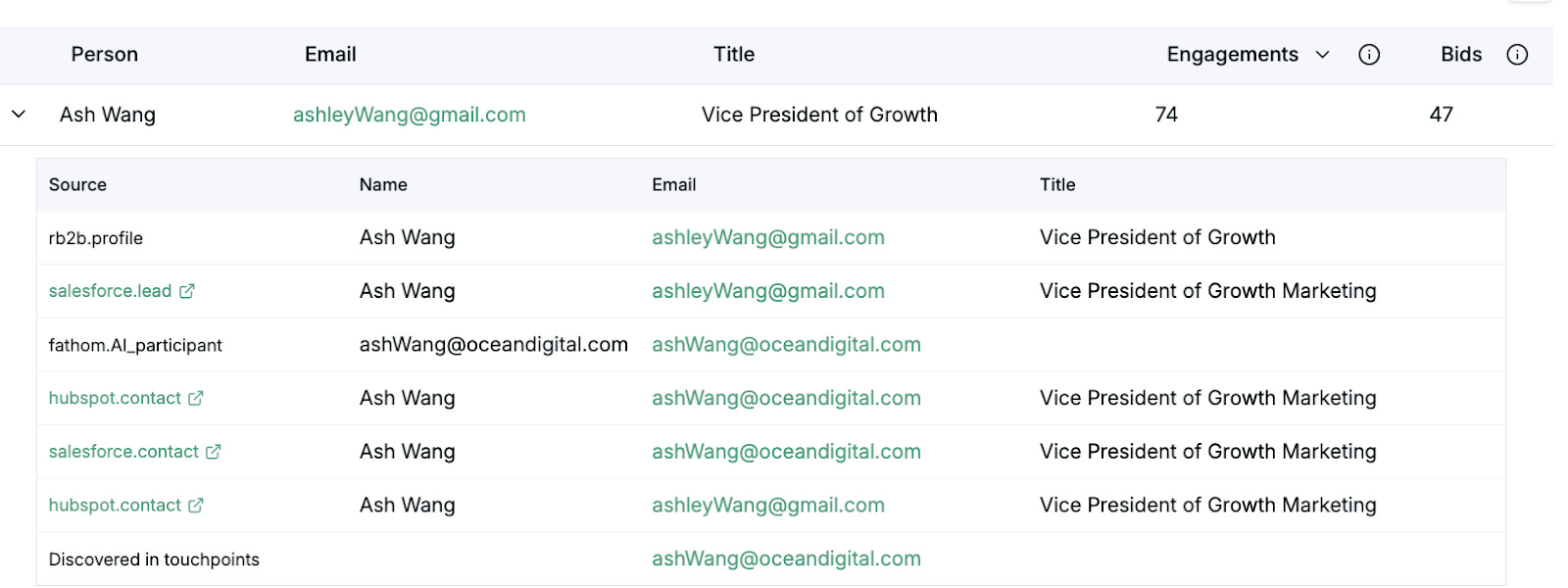

Tracking is incomplete and noisy - identity gaps, cross-device behavior, missing interactions

Image: Example of the type of persona data found in different systems

Correlation is often mistaken for causation

Journeys get oversimplified: everyone wants to know, “But what is the one thing that really brought in this deal?”

External factors distort observed paths: pricing, competition, timing

True incrementality still requires experimentation

So leaders settle for “good enough” models they don’t trust (because they need something for Finance), while actually running on instinct.

III. What’s Actually Changed with AI

Three fundamental shifts:

1. Unstructured context is now capturable

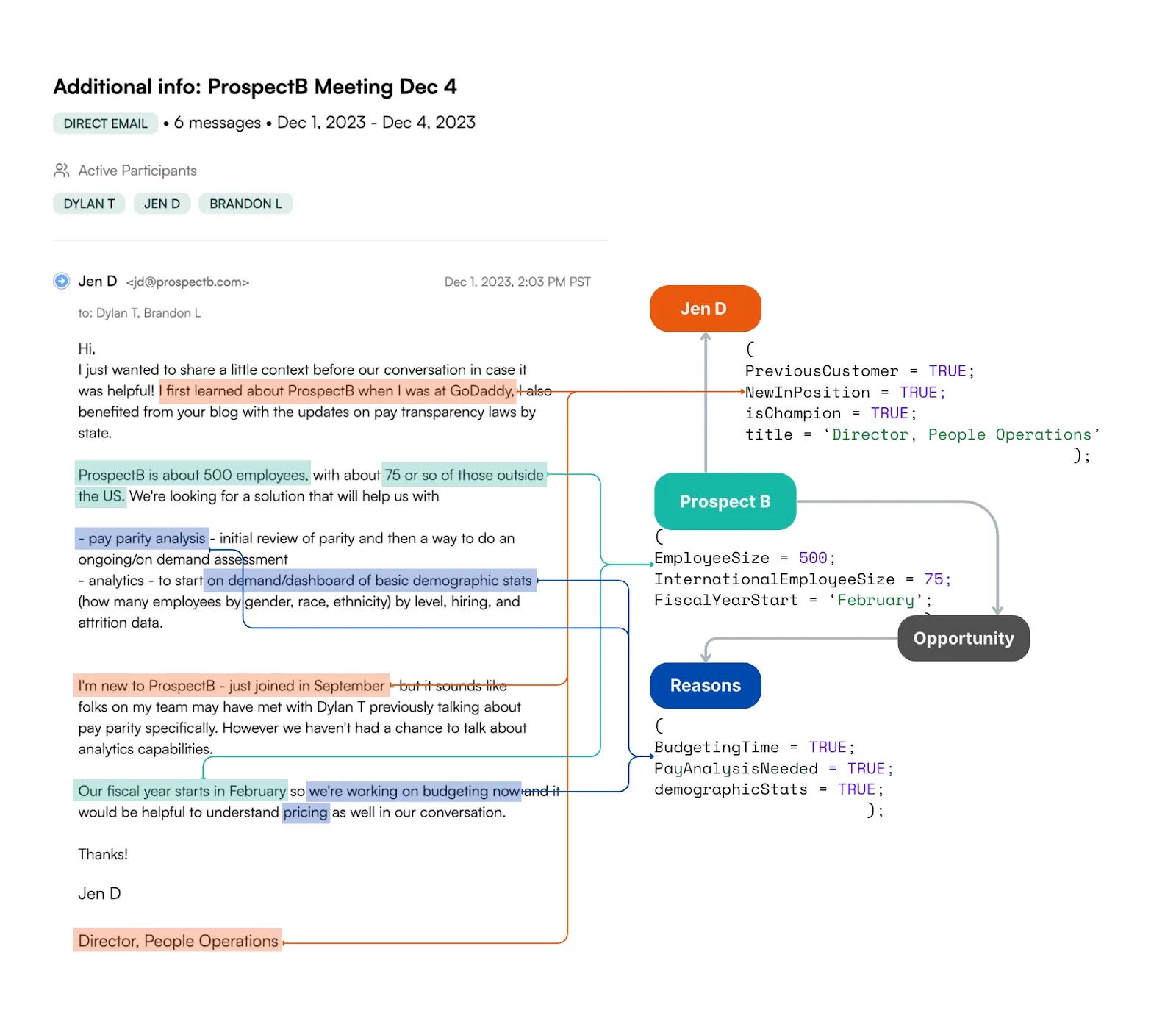

Conversation intelligence extracts signals from calls, emails, and meetings. The “why” behind a demo request often lives in buyer language, not UTM parameters.

Image: Example of how an LLM can extract signals from unstructured data.
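As a rough illustration of pulling the "why" out of buyer language, the sketch below scans a call transcript for self-reported sources. It uses simple regular-expression patterns as a stand-in for the LLM extraction described above, so it is far more brittle than a language model; the pattern list and signal labels are hypothetical.

```python
import re

# Hypothetical mapping from signal label to phrases a buyer might use.
SOURCE_PATTERNS = {
    "podcast": re.compile(r"\bpodcast\b", re.IGNORECASE),
    "peer_referral": re.compile(
        r"\b(colleague|friend|peer)\b.*\b(recommended|mentioned|told)\b", re.IGNORECASE
    ),
    "ai_assistant": re.compile(r"\b(chatgpt|perplexity|claude|gemini)\b", re.IGNORECASE),
    "event": re.compile(r"\b(conference|webinar|booth|meetup)\b", re.IGNORECASE),
}


def extract_source_signals(transcript: str) -> list[dict]:
    """Return one signal per (sentence, pattern) match, keeping the sentence as evidence."""
    signals = []
    # Naive sentence split on terminal punctuation followed by whitespace.
    for sentence in re.split(r"(?<=[.!?])\s+", transcript):
        for label, pattern in SOURCE_PATTERNS.items():
            if pattern.search(sentence):
                signals.append({"signal": label, "evidence": sentence.strip()})
    return signals


call = (
    "Thanks for making time. A colleague on the finance team recommended you "
    "after your webinar. I also asked ChatGPT for options."
)
for s in extract_source_signals(call):
    print(s["signal"], "->", s["evidence"])
# Signals found, in order: peer_referral, event, ai_assistant
```

Keeping the matching sentence as evidence matters more than the matching technique: it lets a person check why a signal was attributed.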

2. Analysis that was too expensive is now feasible

Questions like “what sequence of touches typically precedes a closed-won enterprise deal?” have always been theoretically answerable. The problem was the work required: manually reconstructing deal timelines, normalizing data across systems, recovering signals buried inside unstructured data, and accounting for duplicate or missing touchpoints.

For most teams, the insight wasn’t worth the effort. But thanks to AI, that math has changed.
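Once deal timelines have been reconstructed, the "what sequence precedes a closed-won deal?" question becomes a counting exercise. A minimal sketch, assuming each deal is already a clean, chronologically ordered list of touch types (which is the expensive part described above); the deal data and the three-touch window are hypothetical.

```python
from collections import Counter

# Hypothetical reconstructed timelines: (outcome, ordered touch types).
deals = [
    ("won", ["content", "sdr_outreach", "webinar", "demo"]),
    ("won", ["partner_referral", "content", "sdr_outreach", "demo"]),
    ("lost", ["cold_outbound", "demo"]),
    ("won", ["event", "content", "sdr_outreach", "demo"]),
    ("lost", ["content", "cold_outbound", "demo"]),
]


def preceding_sequences(deals, outcome="won", window=3):
    """Count the last `window` touches before close for deals with the given outcome."""
    counts = Counter()
    for result, touches in deals:
        if result == outcome:
            counts[tuple(touches[-window:])] += 1
    return counts


for seq, n in preceding_sequences(deals).most_common(2):
    print(" -> ".join(seq), n)
# content -> sdr_outreach -> demo 2
# sdr_outreach -> webinar -> demo 1
```

Looking only at the final few touches is one simple framing; comparing the same counts for lost deals is what turns a frequent sequence into a candidate pattern.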

3. We moved from data scarcity to data abundance

The context exists across calls, emails, CRM, marketing automation, web behavior, and chat. The hard part is stitching it into a coherent, trustworthy account story.

The key reframe:

Static rules like “credit goes to sales unless there was a marketing event within 7 days” aren’t stupid; they have been necessary compromises. When the cost of answering more nuanced questions exceeded their value, compression was rational.

But that’s no longer the case.
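For contrast, here is the kind of static rule quoted above written out as code. A minimal sketch, assuming the 7-day window is counted backward from the opportunity’s creation date (the rule as quoted does not say which date anchors it); the function name and dates are hypothetical.

```python
from datetime import date, timedelta


def assign_credit(opportunity_created: date, marketing_event_dates: list[date],
                  window_days: int = 7) -> str:
    """Static rule: sales gets credit unless a marketing event happened
    within `window_days` before (or on) the opportunity creation date."""
    window_start = opportunity_created - timedelta(days=window_days)
    for event_day in marketing_event_dates:
        if window_start <= event_day <= opportunity_created:
            return "marketing"
    return "sales"


opp = date(2025, 3, 20)
print(assign_credit(opp, [date(2025, 3, 15)]))  # marketing (5 days before)
print(assign_credit(opp, [date(2025, 3, 10)]))  # sales (10 days before)
print(assign_credit(opp, []))                   # sales
```

The rule is cheap enough to run on every deal, which is why it made sense; it also cannot distinguish an event that activated a deal from one someone happened to attend, and an event on day 8 simply disappears.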

IV. A Different Approach: Questions Instead of Models

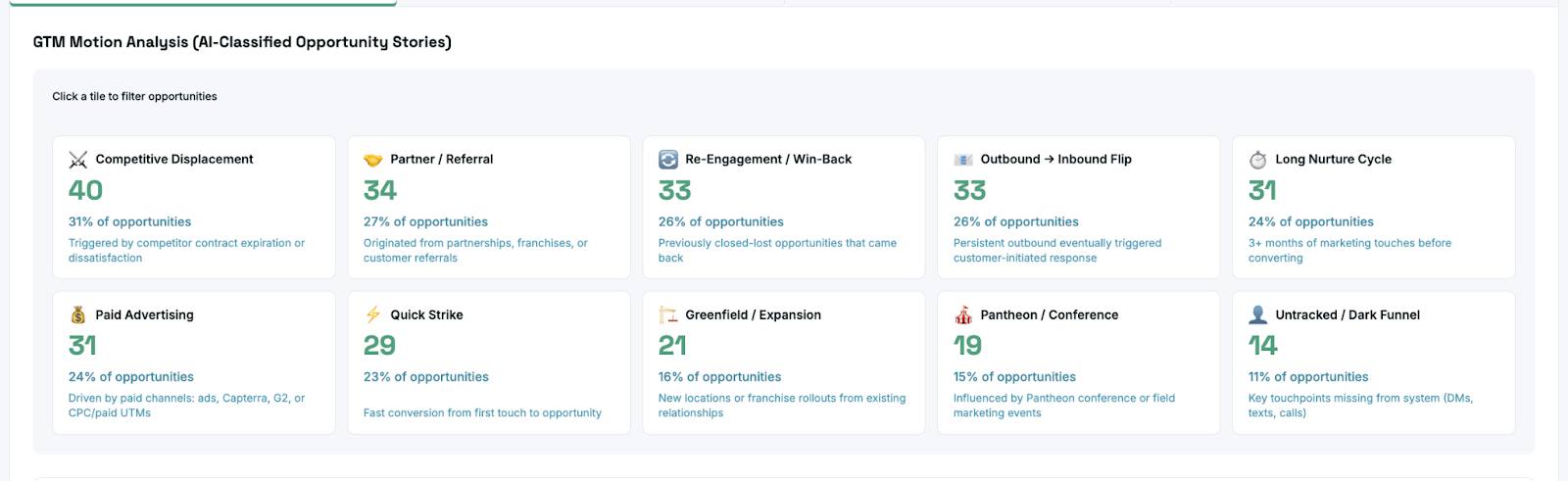

The AI paradigm shift: AI has enabled teams to stop forcing deals into attribution models and start answering specific questions about each deal, then aggregate those answers into insights and recommendations.

Attribution has always been greyscale—forcing nuanced reality into a few buckets. What’s now possible is answering “what worked” questions in full color, deal by deal, then aggregating patterns.

The questions you can now answer:

What actually activated this deal? Example: “The inflection point was a partner referral to the CFO, followed by an internal forward chain we wouldn’t have seen in CRM.”

Image: Example of how AI can make connections between structured and unstructured data to understand the deal story.

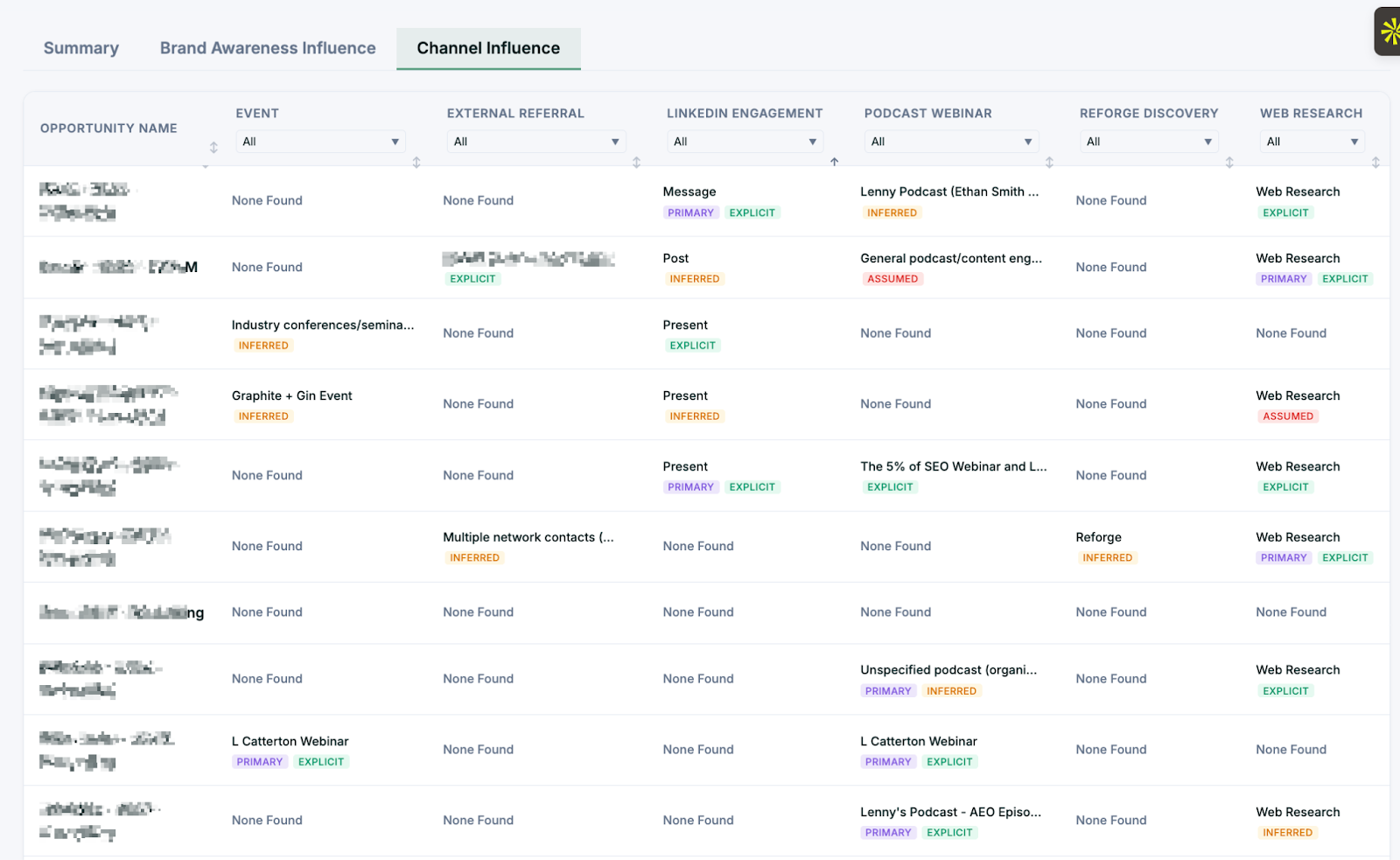

What role did each channel play at each stage? Example: “Paid search drove initial awareness, but the webinar attendance correlated with deals moving from Stage 2 to Stage 3.”

One of our customers found that a third of their 2025 revenue came from third-party referrals that did not show up in any of their other attribution models.

Image: Example of results from Upside deep research agents looking for specific channel influence on deals.

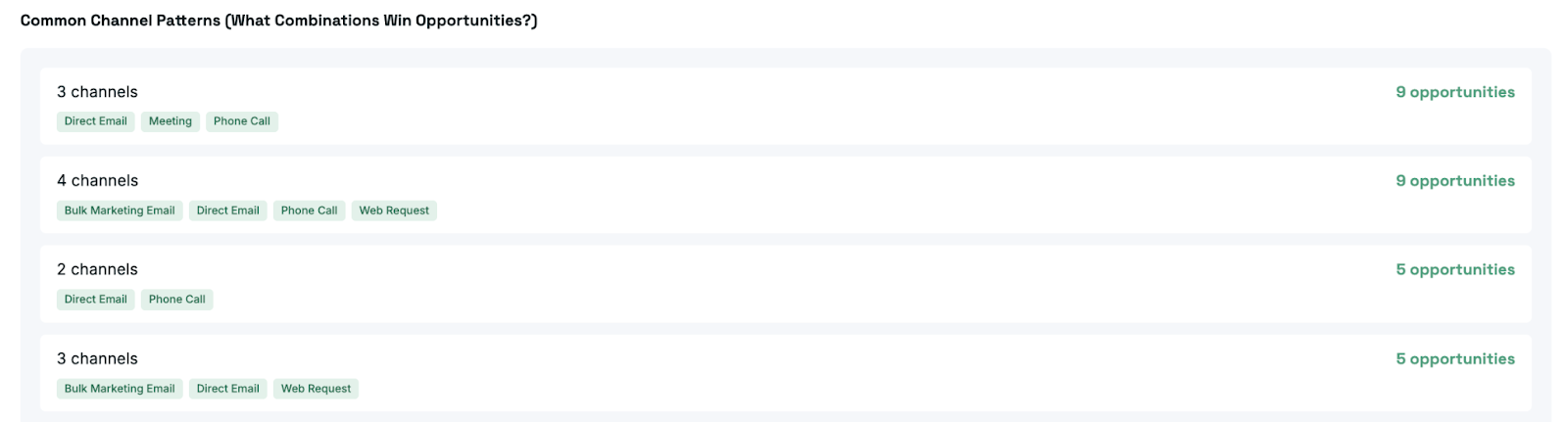

What sequences lead to new opportunities? Example: “Deals that start with content engagement + SDR outreach convert at 2x the rate of cold outbound alone.”

Image: Example of GTM motions detected by Upside AI Deep Research agents analyzing a set of deals.

Image: Example of GTM channel progression detected by Upside AI Deep Research agents analyzing a set of deals.
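A "2x" comparison like the example above is a ratio of conversion rates between groups defined by how each opportunity started. A minimal sketch with hypothetical records and motion labels; with samples this small, a gap like this can easily be noise.

```python
from collections import defaultdict

# Hypothetical records: (starting motion, converted?)
records = [
    ("content+sdr", True), ("content+sdr", False),
    ("content+sdr", True), ("content+sdr", False),
    ("cold_outbound", False), ("cold_outbound", True),
    ("cold_outbound", False), ("cold_outbound", False),
]


def conversion_rates(records):
    """Conversion rate per starting motion: converted / total."""
    totals, wins = defaultdict(int), defaultdict(int)
    for motion, converted in records:
        totals[motion] += 1
        wins[motion] += int(converted)
    return {motion: wins[motion] / totals[motion] for motion in totals}


rates = conversion_rates(records)
lift = rates["content+sdr"] / rates["cold_outbound"]
print(rates, f"lift={lift:.2f}x")
# {'content+sdr': 0.5, 'cold_outbound': 0.25} lift=2.00x
```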

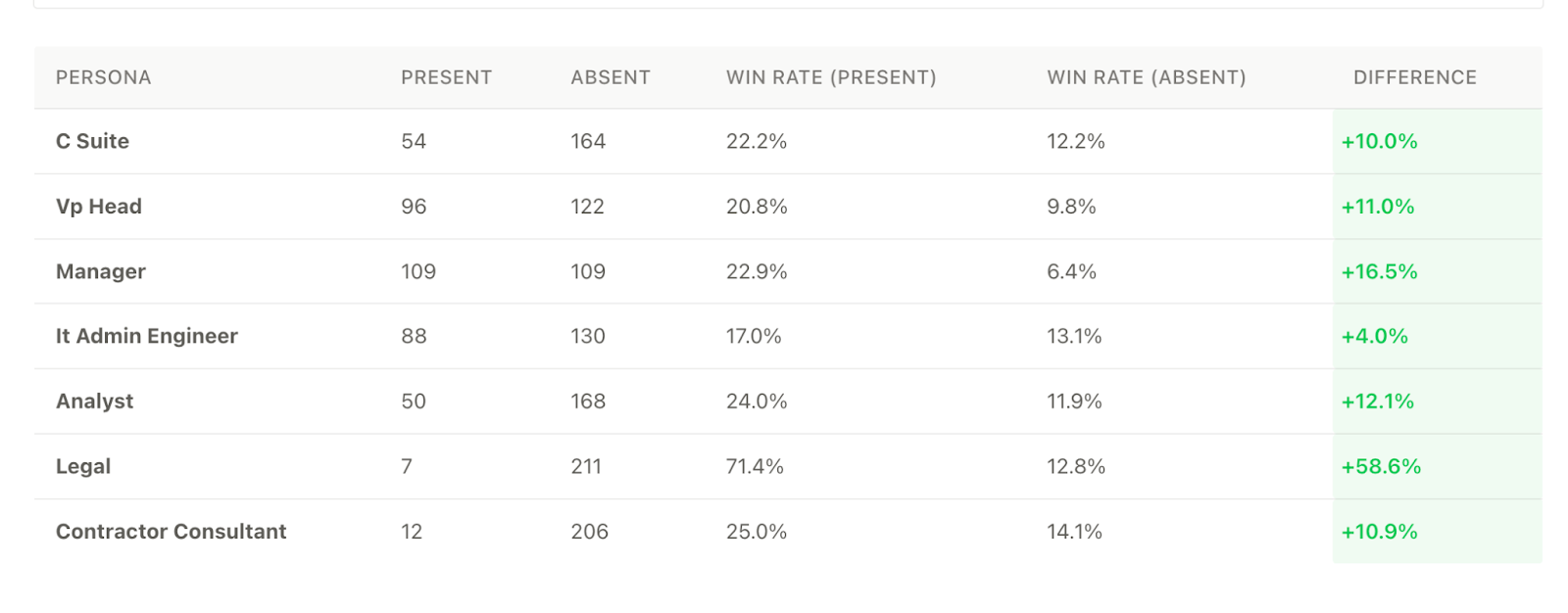

Who are the real buying personas, and which ones do AEs consistently miss? Example: “Technical evaluators appear in 80% of closed-won deals but are added as contact roles in only 30%.”

Image: Example of persona presence detected in buying groups (beyond contact roles in Salesforce) by Upside and impact on win rates.
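The persona gap in the example above compares two rates over the same set of deals: how often a persona shows up in activity data versus how often it is recorded as a CRM contact role. A minimal sketch, assuming persona detection has already happened upstream; the deal data and persona labels are hypothetical.

```python
# Hypothetical closed-won deals: personas detected in activity data
# (calls, emails, meetings) vs. personas recorded as CRM contact roles.
won_deals = [
    {"detected": {"economic_buyer", "technical_evaluator"}, "contact_roles": {"economic_buyer"}},
    {"detected": {"technical_evaluator", "champion"}, "contact_roles": {"champion", "technical_evaluator"}},
    {"detected": {"economic_buyer", "champion"}, "contact_roles": {"economic_buyer"}},
    {"detected": {"technical_evaluator", "economic_buyer"}, "contact_roles": {"economic_buyer"}},
]


def coverage_gap(deals, persona):
    """Return (share of deals where the persona was detected,
    share of deals where it was recorded as a contact role)."""
    n = len(deals)
    present = sum(persona in d["detected"] for d in deals) / n
    recorded = sum(persona in d["contact_roles"] for d in deals) / n
    return present, recorded


present, recorded = coverage_gap(won_deals, "technical_evaluator")
print(f"present in {present:.0%} of deals, contact role in {recorded:.0%}")
# present in 75% of deals, contact role in 25%
```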

V. The Prerequisite: Data Quality

The critical caveat: AI is only as good as its data.

Wrong campaign dates, missing statuses, incomplete records: without careful guidance, messy data like this leads AI to wrong conclusions, just as it would a human. Garbage in, garbage out.
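Checks for problems like these are cheap to run before any analysis. A minimal sketch of flagging wrong dates, missing statuses, and incomplete records; the field names and allowed status values are assumptions for illustration, not any particular CRM’s schema.

```python
from datetime import date

VALID_STATUSES = {"planned", "active", "completed"}  # hypothetical status values


def audit_campaign(c: dict) -> list[str]:
    """Return human-readable data-quality problems for one campaign record."""
    problems = []
    for field in ("name", "start", "end", "status"):
        if c.get(field) is None:
            problems.append(f"missing {field}")
    if c.get("start") and c.get("end") and c["end"] < c["start"]:
        problems.append("end date before start date")
    if c.get("status") is not None and c["status"] not in VALID_STATUSES:
        problems.append(f"unknown status {c['status']!r}")
    return problems


campaigns = [
    {"name": "Q1 webinar", "start": date(2025, 2, 10), "end": date(2025, 2, 10), "status": "completed"},
    {"name": "Field event", "start": date(2025, 5, 1), "end": date(2024, 5, 1), "status": "completed"},
    {"name": "Partner promo", "start": date(2025, 6, 1), "end": None, "status": "actve"},
]
for c in campaigns:
    print(c["name"], audit_campaign(c))
# Q1 webinar []
# Field event ['end date before start date']
# Partner promo ['missing end', "unknown status 'actve'"]
```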

In my experience, the prerequisite for AI-powered deal analysis is a data layer that:

Resolves identities across systems

Reconstructs timelines from fragmented touchpoints

De-duplicates and heals messy GTM data

Image: Example of the type of persona data found in different systems that needs to be merged into one identity.
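A heavily simplified sketch of the three data-layer steps listed above, assuming every touch carries an email address. Real identity resolution also has to match people without one, across devices and aliases; using a lowercased email as the identity key and exact-match de-duplication are simplifying assumptions, not how any particular product works.

```python
from collections import defaultdict
from datetime import datetime


def normalize_email(email: str) -> str:
    """Lowercase and trim: a deliberately simple identity key."""
    return email.strip().lower()


def build_timelines(touches: list[dict]) -> dict[str, list[dict]]:
    """Resolve identities, drop exact duplicates, and sort each person's touches by time."""
    timelines: dict[str, list[dict]] = defaultdict(list)
    seen = set()
    for t in touches:
        person = normalize_email(t["email"])
        key = (person, t["type"], t["at"])
        if key in seen:  # same person, same touch type, same moment: treat as a duplicate
            continue
        seen.add(key)
        timelines[person].append({"type": t["type"], "at": t["at"], "source": t["source"]})
    for events in timelines.values():
        events.sort(key=lambda e: e["at"])
    return dict(timelines)


# Hypothetical touches pulled from three systems.
touches = [
    {"email": "Dana@Acme.com ", "type": "webinar", "at": datetime(2025, 3, 4, 16), "source": "marketing_automation"},
    {"email": "dana@acme.com", "type": "call", "at": datetime(2025, 3, 11, 10), "source": "conversation_intel"},
    {"email": "dana@acme.com", "type": "webinar", "at": datetime(2025, 3, 4, 16), "source": "crm"},
    {"email": "lee@acme.com", "type": "email_reply", "at": datetime(2025, 3, 6, 9), "source": "crm"},
]

for person, events in build_timelines(touches).items():
    print(person, [e["type"] for e in events])
# dana@acme.com ['webinar', 'call']
# lee@acme.com ['email_reply']
```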

That’s what my team has been building for the past two years. But the principle applies regardless of tooling: if your underlying data is scattered and incomplete, you’ll just get bad answers more quickly. Even AI can’t perform miracles.

AI also needs a way to be checked AND to check itself. While AI is getting better, it still makes mistakes and needs a way to be debugged. Any analytics or data tool that uses AI needs a debug console: a way for a human to check the results.

Image: Visual representation of a deal with filters and citations for AI insights.
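One simple way to make AI output checkable, sketched under assumptions: every generated insight carries citations to the source records it relied on, and a verification pass flags claims whose citations are missing or do not resolve. The `Insight` structure and record IDs are hypothetical, and this only checks that cited evidence exists, not that the reasoning over it is sound; that judgment is what the human reviewer is for.

```python
from dataclasses import dataclass, field


@dataclass
class Insight:
    claim: str
    citations: list[str] = field(default_factory=list)  # IDs of source records


def verify(insight: Insight, records: dict[str, str]) -> list[str]:
    """Return problems a reviewer should look at before trusting the insight."""
    if not insight.citations:
        return ["no citations: claim cannot be checked"]
    return [f"citation {cid} not found" for cid in insight.citations if cid not in records]


# Hypothetical source records keyed by ID.
records = {
    "call-17": "Call transcript: a partner suggested we take a look",
    "email-42": "Email: VP forwards evaluation note to ops manager",
}
good = Insight("Partner referral activated the deal", ["call-17", "email-42"])
bad = Insight("Webinar drove the deal", ["webinar-9"])
unsupported = Insight("Brand campaign was decisive")

for ins in (good, bad, unsupported):
    print(ins.claim, "->", verify(ins, records) or "ok")
```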

VI. What This Looks Like in Practice

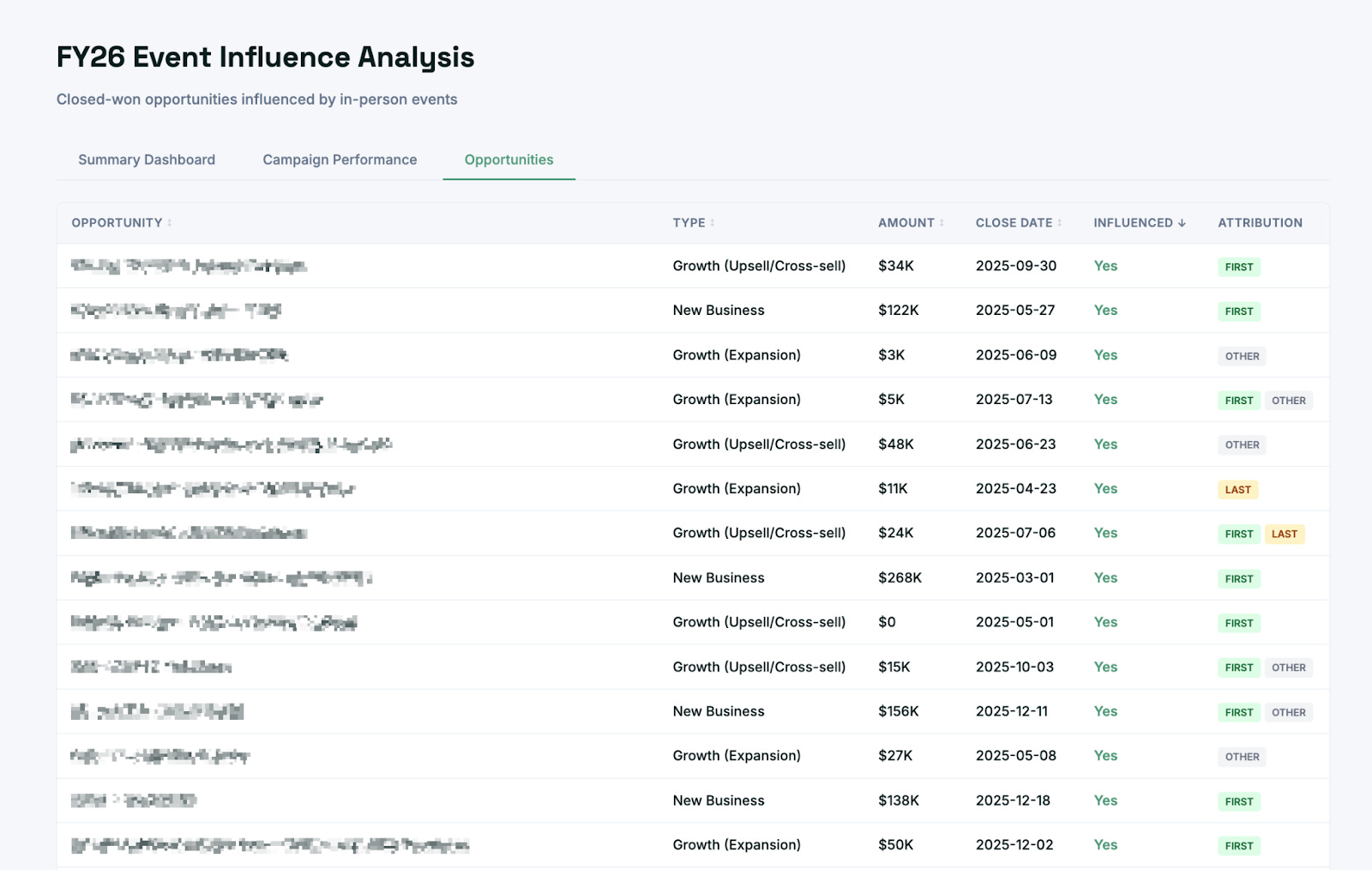

Custom, smart insights for every org: Traditional rules-based models often get it wrong. But you can ask AI to understand what type of influence a campaign actually had on an opportunity, and whether it was the first meaningful interaction with the buying group. AI does this much better than rules-based systems because it won’t get bogged down by edge cases, like someone visiting the website during an event before the attendee list was uploaded, which would make direct traffic look like the first touch.

Image: Event influence on deals, sorted by type of touch in the deal cycle as defined by the customer.
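The edge case described above is, at its core, an ordering problem: touches sorted by when they were recorded rather than when they happened. The sketch below shows only that fix, with a hand-written rule for what counts as "meaningful"; it is not how an AI model makes the judgment, and the field names and data are hypothetical.

```python
from datetime import datetime

# Hypothetical touches for one buying group. Event attendance is only
# recorded once the attendee list is uploaded, days after the event.
touches = [
    {"type": "direct_web_visit", "occurred_at": datetime(2025, 4, 8, 10, 30),
     "recorded_at": datetime(2025, 4, 8, 10, 30)},
    {"type": "event_attendance", "occurred_at": datetime(2025, 4, 8, 9, 0),
     "recorded_at": datetime(2025, 4, 11, 15, 0)},
    {"type": "email_open", "occurred_at": datetime(2025, 4, 1, 8, 0),
     "recorded_at": datetime(2025, 4, 1, 8, 0)},
]

NOT_MEANINGFUL = {"email_open"}  # assumption: what counts as "meaningful" is org-specific


def first_meaningful(touches, by="occurred_at"):
    """Return the type of the earliest meaningful touch, ordered by the given timestamp."""
    candidates = [t for t in touches if t["type"] not in NOT_MEANINGFUL]
    return min(candidates, key=lambda t: t[by])["type"]


print(first_meaningful(touches, by="recorded_at"))  # direct_web_visit (misleading)
print(first_meaningful(touches, by="occurred_at"))  # event_attendance
```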

Faster reporting + hidden signals: Another customer reduced reporting time from 2-3 hours to minutes and identified a 5% pipeline increase from in-person hosted events, a signal that was invisible in their previous attribution model.

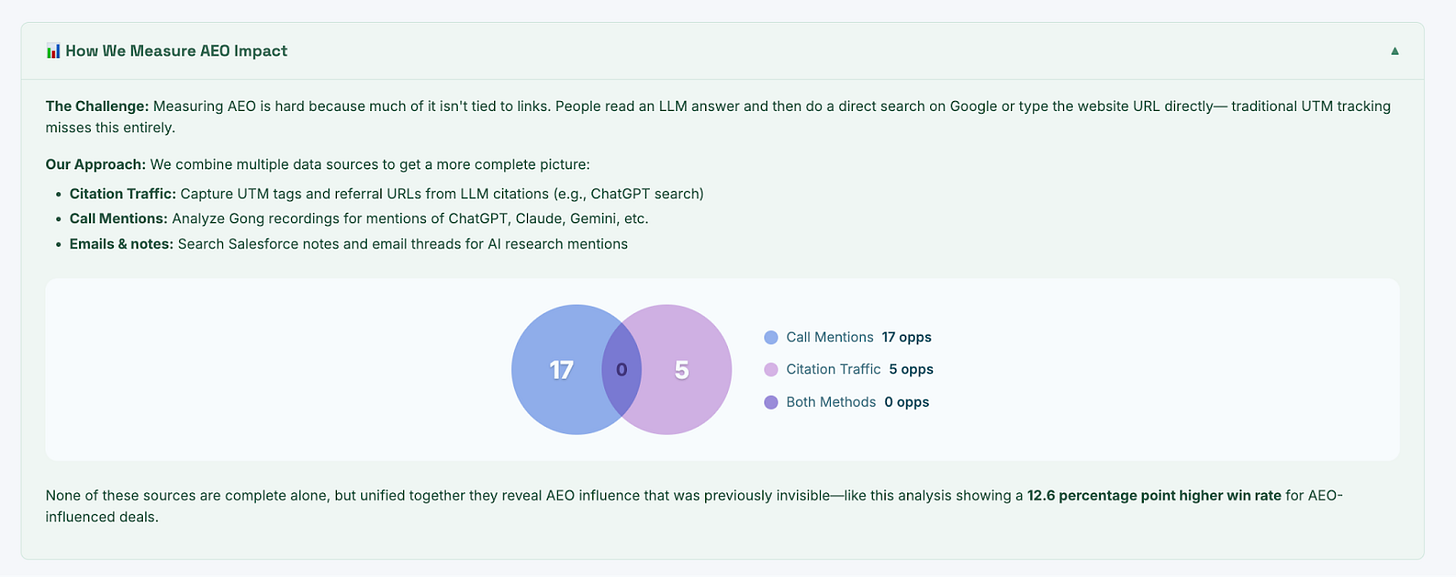

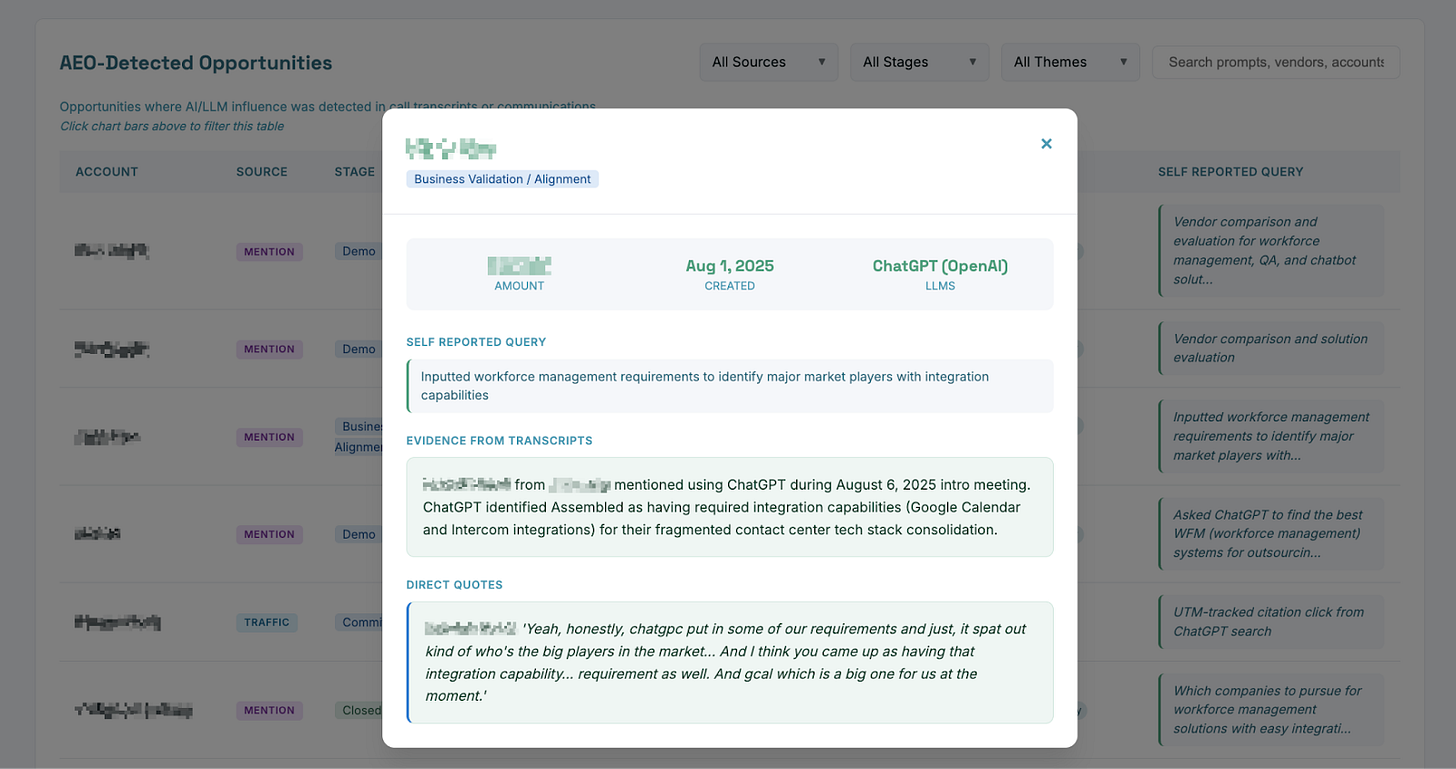

Measuring things that were impossible: AEO (answer engine optimization) influence on pipeline is incredibly hard to measure because people don’t click on links. But in many cases, prospects mention how they heard about you on calls or in forms. By combining citation traffic, call mentions, and email threads, Assembled tracked actual AEO influence on its pipeline.

Image: AEO analysis for the Assembled team.
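A minimal sketch of the "combine several weak signals" idea for AEO: for each opportunity, record which independent sources point to AI answer engines. The referrer hosts, the mention pattern, and the opportunity fields are assumptions for illustration, not how Assembled or Upside implemented it.

```python
import re

AI_REFERRER_HOSTS = {"chatgpt.com", "perplexity.ai"}  # assumption: illustrative list only
AI_MENTION = re.compile(r"\b(chatgpt|perplexity|ai assistant)\b", re.IGNORECASE)


def aeo_evidence(opp: dict) -> list[str]:
    """List which independent sources suggest AI answer engines influenced the opportunity."""
    evidence = []
    if any(ref.removeprefix("www.") in AI_REFERRER_HOSTS for ref in opp["web_referrers"]):
        evidence.append("citation_traffic")
    if any(AI_MENTION.search(text) for text in opp["call_snippets"]):
        evidence.append("call_mention")
    if any(AI_MENTION.search(text) for text in opp["email_snippets"]):
        evidence.append("email_mention")
    return evidence


# Hypothetical opportunities.
opps = {
    "opp-1": {"web_referrers": ["google.com"],
              "call_snippets": ["We found you by asking ChatGPT for vendors."],
              "email_snippets": []},
    "opp-2": {"web_referrers": ["www.perplexity.ai"],
              "call_snippets": [],
              "email_snippets": ["Perplexity listed you as an option."]},
    "opp-3": {"web_referrers": ["linkedin.com"],
              "call_snippets": ["A colleague recommended you."],
              "email_snippets": []},
}
for opp_id, opp in opps.items():
    print(opp_id, aeo_evidence(opp))
# opp-1 ['call_mention']
# opp-2 ['citation_traffic', 'email_mention']
# opp-3 []
```

An opportunity with no AI referral click, like opp-1, can still show evidence through what the buyer said, which is exactly what click-based attribution misses.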

VII. How This Applies at Different Stages of Growth

This approach requires enough deal volume to see patterns.

If you’re running a large enough business (meaningful spend across multiple channels, significant deal volume, consistent GTM investment) then asking “what actually happened in our deals?” is one of the most important questions you can answer.

But if you’re earlier-stage, the reality is different. Volumes are lower. Noise is higher. You might do forensic deal analysis and see patterns that don’t generalize yet. That doesn’t mean the approach doesn’t matter. It means how you use it has to evolve with you.

For smaller teams, the goal isn’t statistical significance—it’s building directional confidence. You’re looking for patterns: Do deals that involve events close faster? Do certain personas correlate with larger deals? Even without perfect data, that framing matters.

The mistake smaller companies make isn’t that they can’t do this perfectly. It’s that they don’t do it at all. Or they force everything into last-touch attribution and conclude that nothing except bottom-funnel works.
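For a smaller team, a question like "do deals that involve events close faster?" can be answered directionally in a few lines. A sketch with hypothetical deal data; the median is used because a single unusually long deal moves it less than it moves the mean, and with samples this small the result is a hypothesis to keep watching, not a finding.

```python
from statistics import median

# Hypothetical closed-won deals: (had an event touch?, days from first touch to close)
deals = [(True, 48), (True, 62), (False, 95), (True, 55), (False, 71), (False, 120), (True, 80)]


def median_cycle(deals, with_event: bool) -> float:
    """Median days to close for deals with (or without) an event touch."""
    return median([days for had_event, days in deals if had_event == with_event])


with_events = median_cycle(deals, True)
without_events = median_cycle(deals, False)
n_with = sum(1 for had_event, _ in deals if had_event)
print(f"with events: {with_events} days (n={n_with}), "
      f"without: {without_events} days (n={len(deals) - n_with})")
# with events: 58.5 days (n=4), without: 95 days (n=3)
```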

VIII. Conclusion: A New Question

The wrong question has always been: “Which attribution model should we use?”

The better question is: “Do we actually know what happened in our deals?”

Attribution doesn’t have to be the starting point anymore. When you begin with reconstructing deal reality—who was involved, what signals mattered, how influence accumulated over time—the “what worked” answers emerge naturally from there.

And once you can defend those answers to Finance with real evidence, rather than simplified models or gut instinct, the budget conversations change entirely.

Thank you, Mada and Alex, for the insights. For more details on Upside, visit upside.tech. (This is not a sponsored blog post, just an area of interest for me and my clients.)

Carilu Dietrich is a former CMO, most notably the head of marketing who took Atlassian public. She currently advises CEOs and CMOs of high-growth tech companies. Carilu helps leaders operationalize the chaos of scale, see around corners, and improve marketing and company performance.
